Biodiversity Data Journal :

Methods

Corresponding author:

Academic editor: Edward Baker

Received: 16 Aug 2016 | Accepted: 01 Dec 2016 | Published: 06 Dec 2016

© 2016 Tavis Forrester, Tim O'Brien, Eric Fegraus, Patrick Jansen, Jonathan Palmer, Roland Kays, Jorge Ahumada, Beth Stern, William McShea

This is an open access article distributed under the terms of the Creative Commons Attribution License (CC BY 4.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Citation:

Forrester T, O'Brien T, Fegraus E, Jansen P, Palmer J, Kays R, Ahumada J, Stern B, McShea W (2016) An Open Standard for Camera Trap Data. Biodiversity Data Journal 4: e10197. https://doi.org/10.3897/BDJ.4.e10197

Abstract

Camera traps that capture photos of animals are a valuable tool for monitoring biodiversity. The use of camera traps is rapidly increasing and there is an urgent need for standardization to facilitate data management, reporting and data sharing. Here we offer the Camera Trap Metadata Standard as an open data standard for storing and sharing camera trap data, developed by experts from a variety of organizations. The standard captures information necessary to share data between projects and offers a foundation for collecting the more detailed data needed for advanced analysis. The data standard captures information about study design, the type of camera used, and the location and species names for all detections in a standardized way. This information is critical for accurately assessing results from individual camera trapping projects and for combining data from multiple studies for meta-analysis. This data standard is an important step in aligning camera trapping surveys with best practices in data-intensive science. Ecology is moving rapidly into the realm of big data, and central data repositories, which are now emerging for camera trap data, are becoming a critical tool. This data standard will help researchers standardize data terms, align past data to new repositories, and provide a framework for using data across repositories and research projects to advance animal ecology and conservation.

Keywords

big data; biodiversity; camera trap; data repository; data schema

Introduction

Accurately surveying and monitoring animal communities is an essential part of wildlife management and conservation (

While camera traps have limits as a survey tool (

The difficulties of aggregating data among camera-trapping experts affiliated with a variety of organizations, including the Smithsonian Institution, the Wildlife Conservation Society, Conservation International, and the North Carolina Museum of Natural Sciences, directly led to the creation of this data standard. Researchers found that the use of different authorities for species names, inconsistent recording of habitat features, differing levels of recorded data regarding camera deployments, and most recently the tagging of single photos vs. photo bursts, all created problems when attempting to combine data. The lessons learned from large scale monitoring projects such as the Tropical Ecology Assessment and Monitoring network (TEAM) (

Here we present the Camera Trap Metadata Standard (CTMS). This data standard offers a common data format to facilitate data storage and sharing. The standard also provides a structure for researchers to manage their data. Finally, the standard is an essential step toward providing access to data through web services and other automated methods, a key element of open access to research data (

Description of the Data Standard

We categorize camera-trap data hierarchically into four levels (Project, Deployment, Image Sequence, and Image). The terms used in the standard are: (1) A Project is a scientific study that has a certain objective, defined methods, and a defined boundary in space and time. (2) A camera Deployment is a unique placement of a camera trap in space and time. (3) An image Sequence is a group of images that are all captured by a single detection event, defined as all pictures taken within 60 seconds of the previous picture or within another time period defined by the Project. A sequence can be either a burst of photographs or a video clip. (4) A camera-trap Image is an individual image captured by a camera trap, which may be part of a multi-image sequence.
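The Sequence definition above can be illustrated with a minimal sketch that groups the images of one Deployment into detection events. The class and field names (`Image`, `Sequence`, `image_id`, `timestamp`) are illustrative assumptions and are not taken from the standard, and treating a gap of exactly 60 seconds as part of the same sequence is also an assumption, since the text does not say whether the threshold is inclusive.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List


@dataclass
class Image:
    image_id: str        # hypothetical field name, for illustration only
    timestamp: datetime  # time the camera trap captured the image


@dataclass
class Sequence:
    images: List[Image] = field(default_factory=list)


def group_into_sequences(images, max_gap=timedelta(seconds=60)):
    """Group the images of one deployment into sequences.

    An image joins the current sequence when it was taken within
    `max_gap` of the previous image (assumed inclusive); otherwise
    it starts a new sequence. A Project may pass a different `max_gap`.
    """
    sequences = []
    for image in sorted(images, key=lambda im: im.timestamp):
        previous = sequences[-1].images[-1] if sequences else None
        if previous is not None and image.timestamp - previous.timestamp <= max_gap:
            sequences[-1].images.append(image)
        else:
            sequences.append(Sequence(images=[image]))
    return sequences
```

Note that the gap is measured from the previous picture, not from the first picture of the sequence, so a long burst of closely spaced images stays in a single sequence however long it lasts.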

The data standard describes data relating to camera trap projects with 35 different fields across the four levels (Suppl. material

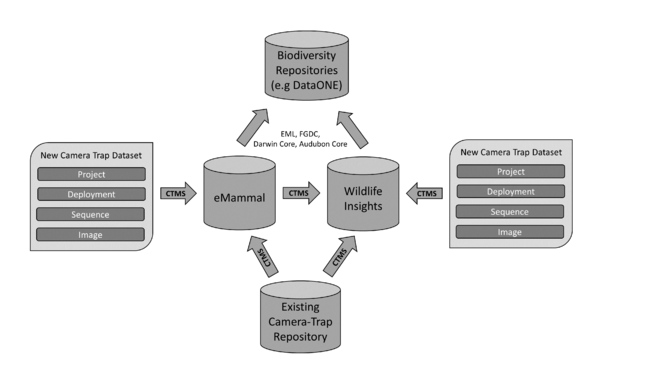

The standard is compatible with the Federal Geographic Data Committee (FGDC), the Darwin Core (TDWG), the Ecological Metadata Language (EML), and the Audubon Core metadata standards to allow data to be easily cross referenced with existing data repositories, such as DataONE (Table

| Authority Name | Description | Link to Resource |
| --- | --- | --- |
| EAC-CPF | Encoded Archival Context for Corporations, Persons and Families | |
| Darwin Core | Data standard for describing and sharing biodiversity information. | |
| FGDC-Biological Profile | Describes Federal Geospatial datasets. | |
| Ecological Metadata Language (EML) | The Ecological Metadata Language (EML) is a metadata standard developed for the ecology discipline. | http://knb.ecoinformatics.org/#external/emlparser/docs/eml-2.1.1/index.html |
| Audubon Core | The Audubon Core is a set of vocabularies designed to represent metadata for biodiversity multimedia resources and collections. | |
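As a rough illustration of what cross-referencing to an external standard can look like in practice, the sketch below renames the keys of one detection record to Darwin Core terms. The Darwin Core terms on the right-hand side (`scientificName`, `decimalLatitude`, `decimalLongitude`, `eventDate`, `individualCount`) are real terms; the field names on the left-hand side are hypothetical placeholders and do not reproduce the field names or the cross-references given in the standard itself.

```python
# Left: hypothetical camera-trap field names (assumptions for illustration).
# Right: existing Darwin Core terms.
FIELD_TO_DARWIN_CORE = {
    "species_name": "scientificName",
    "latitude": "decimalLatitude",
    "longitude": "decimalLongitude",
    "sequence_begin_time": "eventDate",
    "count": "individualCount",
}


def to_darwin_core(record):
    """Split one record into Darwin Core-mapped and unmapped fields.

    Returns a pair of dicts: fields renamed to Darwin Core terms, and
    fields with no mapping, kept under their original names so that
    nothing is silently dropped.
    """
    mapped, unmapped = {}, {}
    for key, value in record.items():
        if key in FIELD_TO_DARWIN_CORE:
            mapped[FIELD_TO_DARWIN_CORE[key]] = value
        else:
            unmapped[key] = value
    return mapped, unmapped
```

Returning the unmapped fields separately makes it easy to see which parts of a record would need a different authority (for example EML or Audubon Core) rather than Darwin Core.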

Discussion

The data standard has been used to import and store data from multiple Smithsonian projects directed by different researchers, combine data from several large scale citizen-science projects (

Animal ecology is rapidly becoming a data-intensive science along with other branches of ecology (

The data standard described here will be updated and maintained by the Wildlife Insights: Camera Trap Data Network (WI), a collaboration between the Smithsonian, the Wildlife Conservation Society, Conservation International, and the North Carolina Museum of Natural Sciences. The CTMS and associated templates (e.g., in XML and JSON, see (Suppl. material

The use of standard data schemas will also allow camera trap data to be stored and archived in open data repositories, an increasingly important resource in modern ecological science (

The world is rapidly changing, and the pace of ecological change has outstripped the typical pace of scientific inquiry. Together, camera trapping and online data repositories offer a powerful tool: scientists can provide rapid analysis, and governments and conservation organizations can use these data to respond quickly to development and change.

Acknowledgements

This research was partially funded by NSF Grant #1232442. We thank R. Costello for his work on establishing eMammal and Wildlife Insights and D. Davis for many discussions on data management and databases. T. O’Brien and E. Fegraus thank the Gordon and Betty Moore Foundation for funding to federate camera-trap data.

Funding program

National Science Foundation Grant #1232442 and the Gordon and Betty Moore Foundation.

Grant title

National Science Foundation Grant #1232442

References

- Monitoring the Status and Trends of Tropical Forest Terrestrial Vertebrate Communities from Camera Trap Data: A Tool for Conservation. PLoS ONE 8: e73707. https://doi.org/10.1371/journal.pone.0073707

- REVIEW: Wildlife camera trapping: a review and recommendations for linking surveys to ecological processes. Journal of Applied Ecology 52: 675‑685. https://doi.org/10.1111/1365-2664.12432

- Data acquisition and management software for camera trap data: A case study from the TEAM Network. Ecological Informatics 6: 345‑353. https://doi.org/10.1016/j.ecoinf.2011.06.003

- Ecological data in the Information Age. Frontiers in Ecology and the Environment 10: 59‑59. https://doi.org/10.1890/1540-9295-10.2.59

- Big data and the future of ecology. Frontiers in Ecology and the Environment 11: 156‑162. https://doi.org/10.1890/120103

- Automatic Storage and Analysis of Camera Trap Data. Bulletin of the Ecological Society of America 91: 352‑360. https://doi.org/10.1890/0012-9623-91.3.352

- The Fourth Paradigm: Data-Intensive Scientific Discovery. Microsoft, 284 pp.

- CPW Photo Warehouse: a custom database to facilitate archiving, identifying, summarizing and managing photo data collected from camera traps. Methods in Ecology and Evolution 7: 499‑504. https://doi.org/10.1111/2041-210X.12503

- Data-intensive Science: A New Paradigm for Biodiversity Studies. BioScience 59: 613‑620. https://doi.org/10.1525/bio.2009.59.7.12

- Software for minimalistic data management in large camera trap studies. Ecological Informatics 24: 11‑16. https://doi.org/10.1016/j.ecoinf.2014.06.004

- Noninvasive Survey Methods for Carnivores. Island Press, 400 pp.

- Volunteer-run cameras as distributed sensors for macrosystem mammal research. Landscape Ecology 31: 55‑66. https://doi.org/10.1007/s10980-015-0262-9

- The pitfalls of wildlife camera trapping as a survey tool in Australia. Australian Mammalogy 37: 13‑22. https://doi.org/10.1071/am14023

- Ecoinformatics: supporting ecology as a data-intensive science. Trends in Ecology & Evolution 27: 85‑93. https://doi.org/10.1016/j.tree.2011.11.016

- Monitoring for conservation. Trends in Ecology & Evolution 21: 668‑673. https://doi.org/10.1016/j.tree.2006.08.007

- camtrapR: an R package for efficient camera trap data management. Methods in Ecology and Evolution Online. https://doi.org/10.1111/2041-210X.12600

- Challenges and Opportunities of Open Data in Ecology. Science 331 (6018): 703‑705. https://doi.org/10.1126/science.1197962

- Surveys using camera traps: are we looking to a brighter future? Animal Conservation 11 (3): 185‑186. https://doi.org/10.1111/j.1469-1795.2008.00180.x

- Snapshot Serengeti, high-frequency annotated camera trap images of 40 mammalian species in an African savanna. Scientific Data 2: 150026. https://doi.org/10.1038/sdata.2015.26

- Camera Base. URL: http://www.atrium-biodiversity.org/tools/camerabase/

Supplementary materials

Camera Trap Metadata Standard. Standards for camera-trap data captured in 35 fields at four hierarchical levels. All data fields are cross-referenced to common ecological metadata standards where possible. Projects may use section 3 (image sequence data), section 4 (image data), or both, depending on how data are collected.

A sample template of the camera trap metadata standard in XML.

A sample template of the camera trap metadata standard in JSON.